Exploring the Forefront of Augmented Reality Design

Liminal was an Augmented Reality Research Lab funded by Samsung Research America to understand what was possible in the consumer AR headset space given the technology available at the time. This project kicked off in 2015 before the HoloLens was announced, predating many of the AR headsets we see today. This work was done over a brief 10-month period.

UNDERSTANDING THE PROBLEM SPACE & MEDIUM

//RESEARCH.CONCEPTING.PROTOTYPING

At the time we were still in the very early stages of AR as a technology, but we knew where we wanted to go: a display that could be worn all day like a pair of glasses. We started by understanding where the industry was on that path and where we could start.

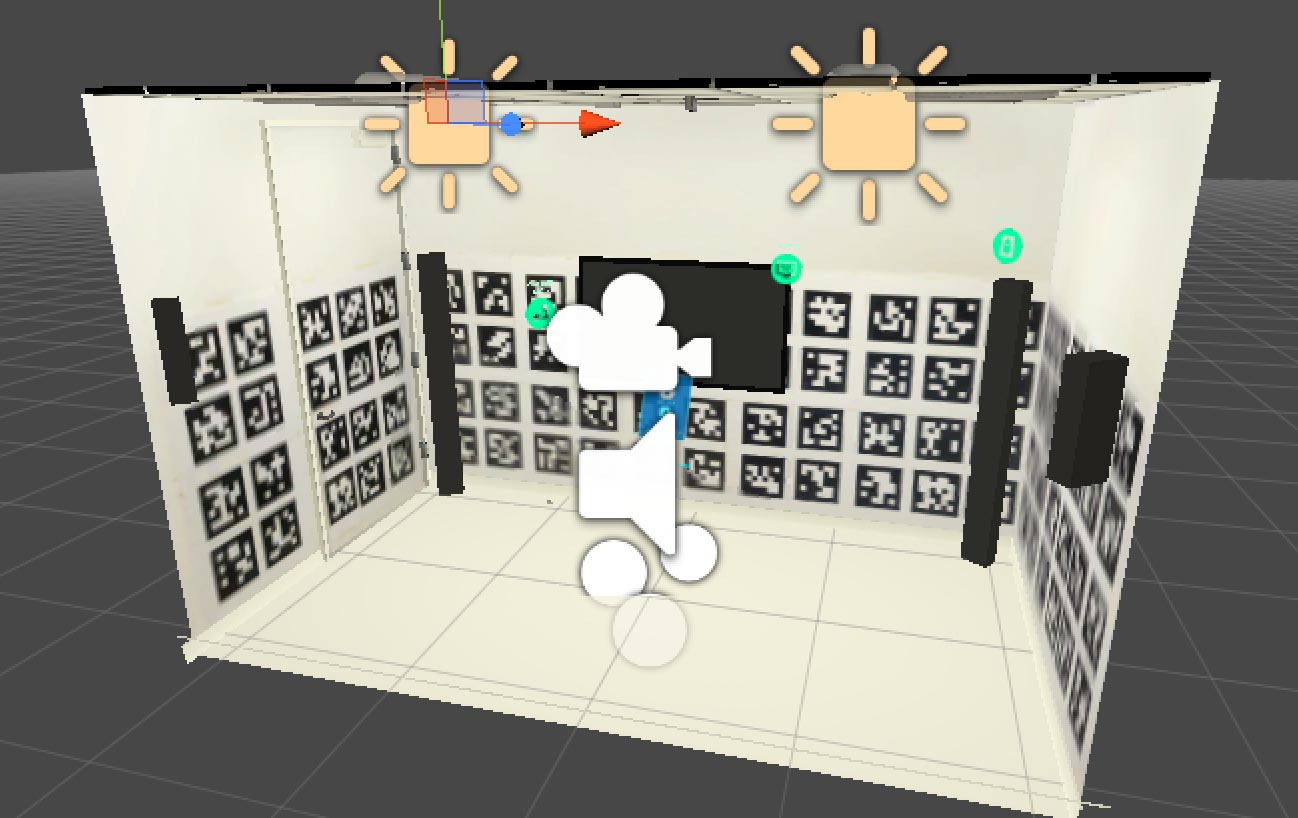

We decided to start prototyping using an Oculus DK2 because it allowed us to experiment with our own tracking solutions and try a variety of innovative interactions. I modeled our demo room in excruciating detail, then aligned the digital model in VR to perfectly match the real world. This allowed us to prototype convincing AR interactions very quickly.

We explored intuitive gestural interactions using the Leap Motion. We found that the more physical and responsive we could make the objects you interacted with, the more "real" they felt.

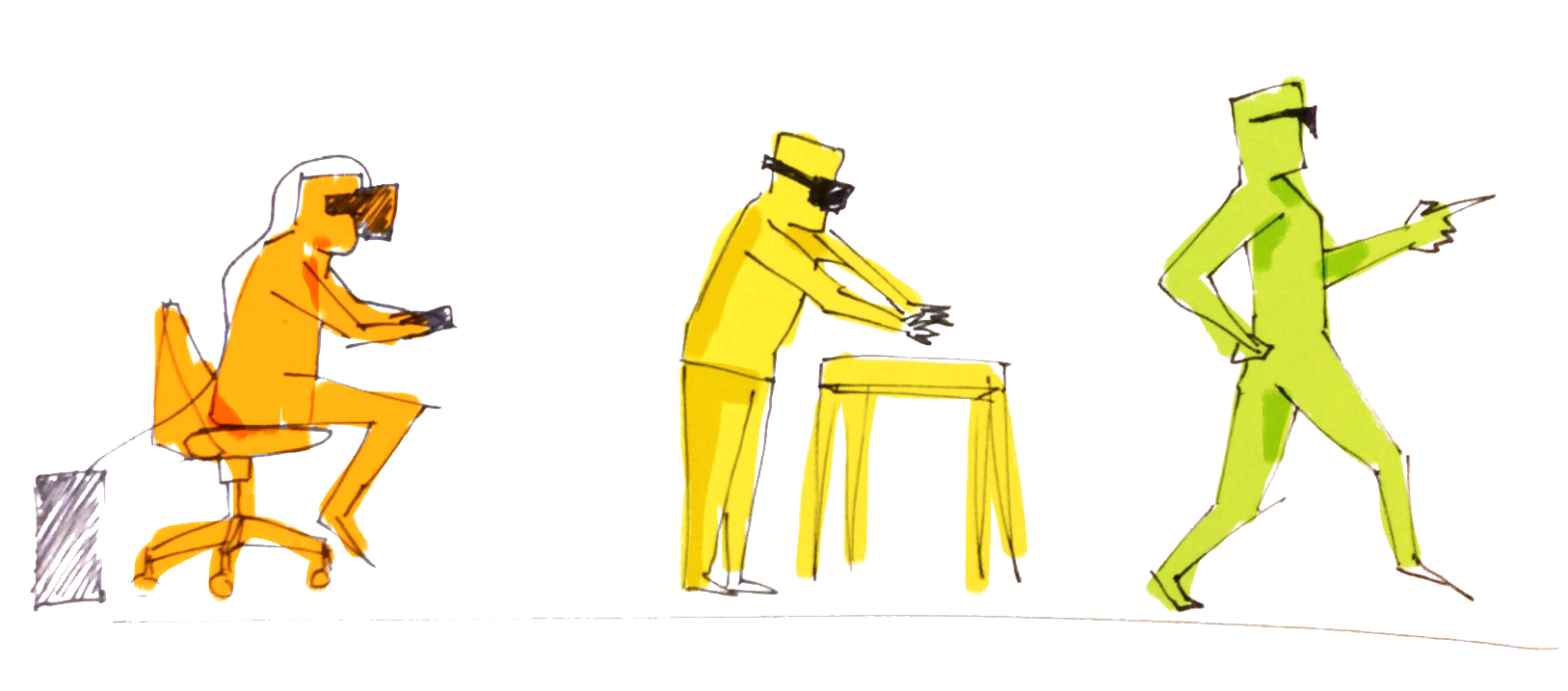

We also explored new techniques for wireframing use cases. We built a set of isometric illustrations that we could use to iterate on room layouts and describe digital object placement. We could then quickly use these as specs for clickthrough mockups using our room-matched VR setup. This allowed us to get in-headset feedback from users quickly.

PHASE 1 LEARNINGS

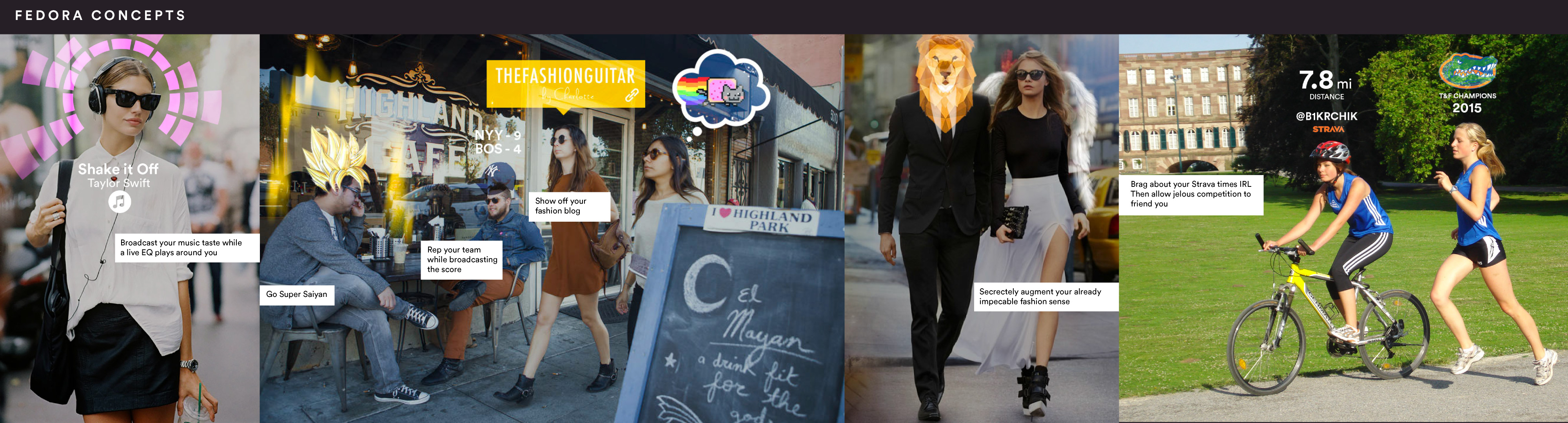

Our first takeaway from a design perspective was that display of contextual information was the killer app, and that the interaction model was more of a nice-to-have. In some cases, users were even embarrassed making large sweeping gestures in front of others who couldn't see what they saw. This led to our second conclusion: AR was inherently social. Unlike VR, you see the other people in the room or on the street. This makes sharing that experience all the more important.

EXPLORING POTENTIAL PRODUCTS

//INDUSTRIAL_DESIGN.CONCEPTING.INTERACTION_DESIGN

Based on our learnings, we came to a conclusion similar to that of many of the big players who have entered the space since. Waveguide displays with the latest tracking were going to get us the closest to the dream of AR glasses. This tech comes with limitations, and we took a uniquely design-oriented approach to address these challenges.

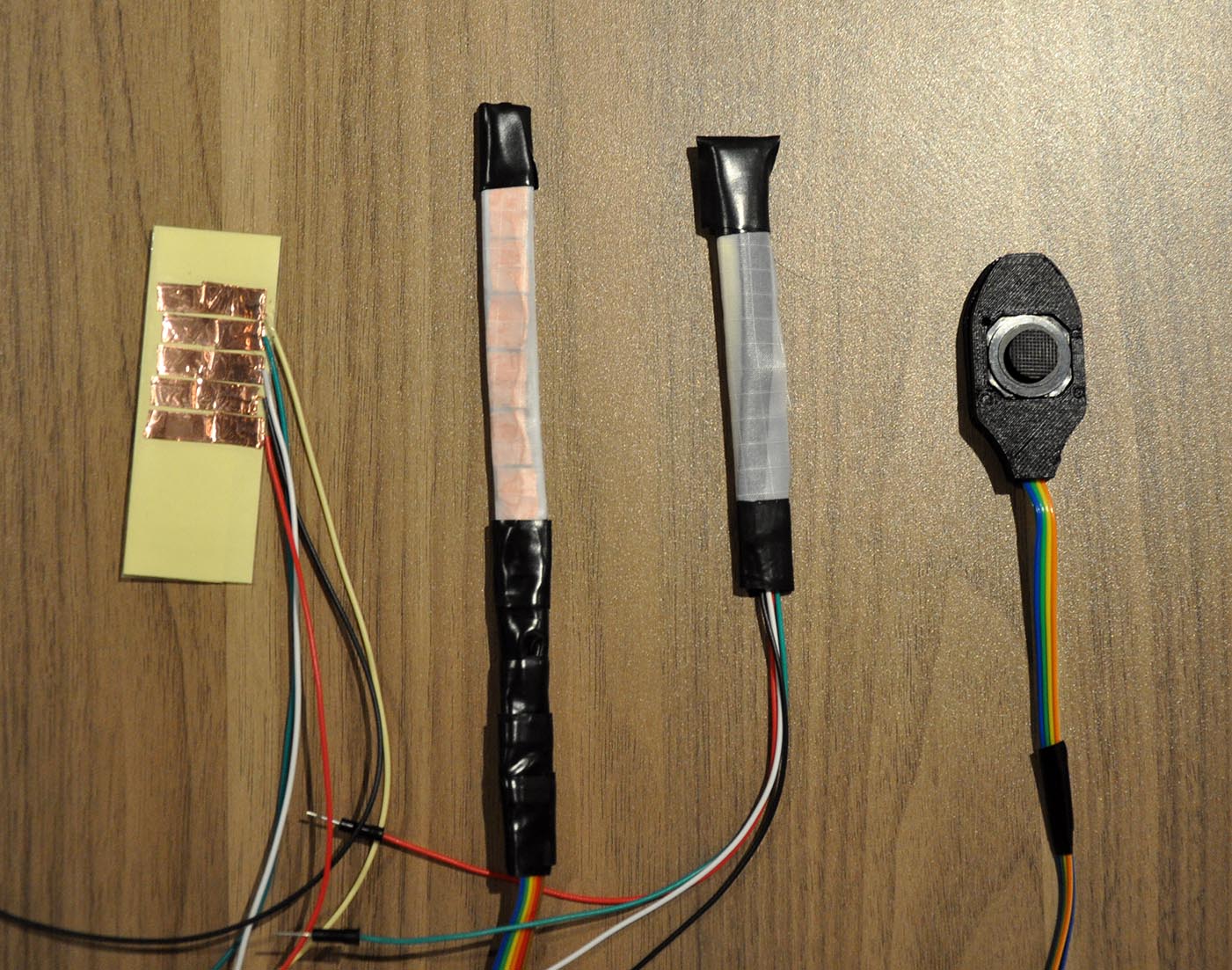

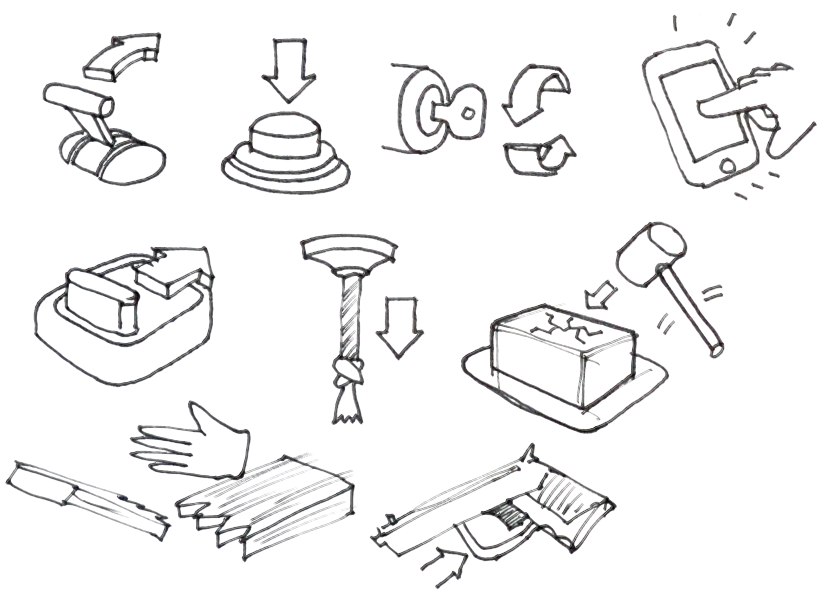

We first needed a platform to build off of that might enable the social & contextual uses we had in mind. I explored options for an industrial design that could be comfortably worn in public settings without drawing too much attention. The compute would be offloaded through a cable to a device you could stash in your pocket. I explored a variety of interfaces that could allow subtle control along the cord, prototyping both the hardware and software interfaces.

We began exploring ideas that leveraged AR's strengths, including contextual information, spatial understanding, and digitized extensions of smart objects. What we quickly realized was that when this information was confined to a single person's view, it was just as isolating as virtual reality. Social immersive computing was AR's strength, and we started leaning into social and collaborative use cases.

We put all of these learnings together into one of the first multi-user AR HMD demos. While wearing laptop backpacks, two users could experience and interact with the same AR scenes. You could tell the hardware had a long way to go (LOL), but for the first time we were able to experience a glimmer of social spatial computing.

PHASE 2 LEARNINGS

We explored an extensive number of use cases in this phase with various hardware implementations. We realized that transparent displays were necessary, but the technology just wasn't there yet. The hardware was clunky and low-res, with a limited field of view. There just weren't many compelling consumer use cases with the limited set of technology. This observation has mostly been borne out in the market, with Microsoft and Magic Leap continuing to struggle to find consumer success. We decided to shift our focus, looking for a market and form factor that could help bootstrap this next generation of computing.

FOCUSING ON A MARKET

//INDUSTRIAL_DESIGN.GRAPHIC.UX_DESIGN

We began looking for a use case that didn't require the AR glasses to be worn all the time and that could get by with more rudimentary tracking. We started to get really interested in the potential of a tool for the outdoors. Similar to a pair of binoculars, it could be held up only when information was needed, and it was a metaphor everyone already understood.

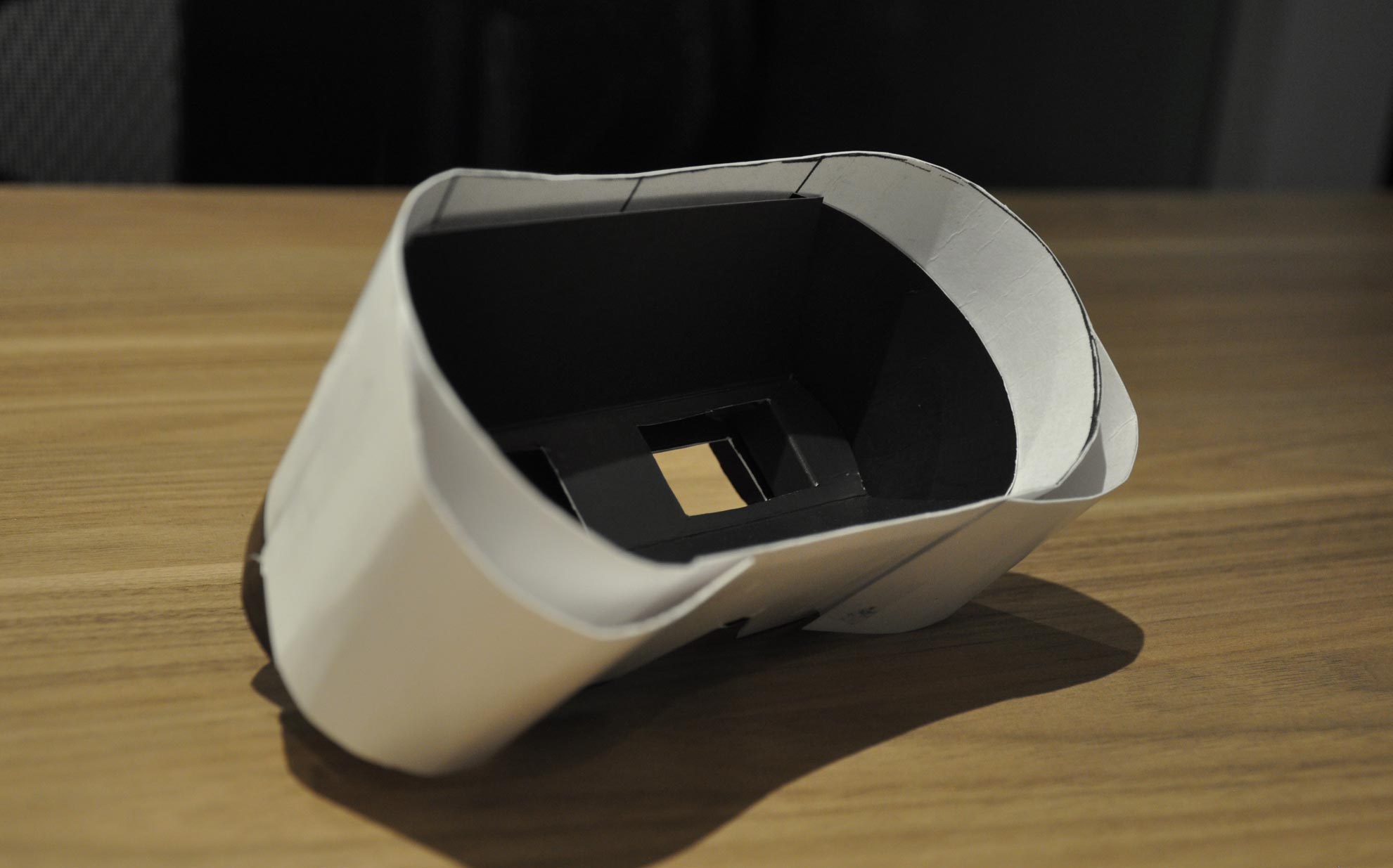

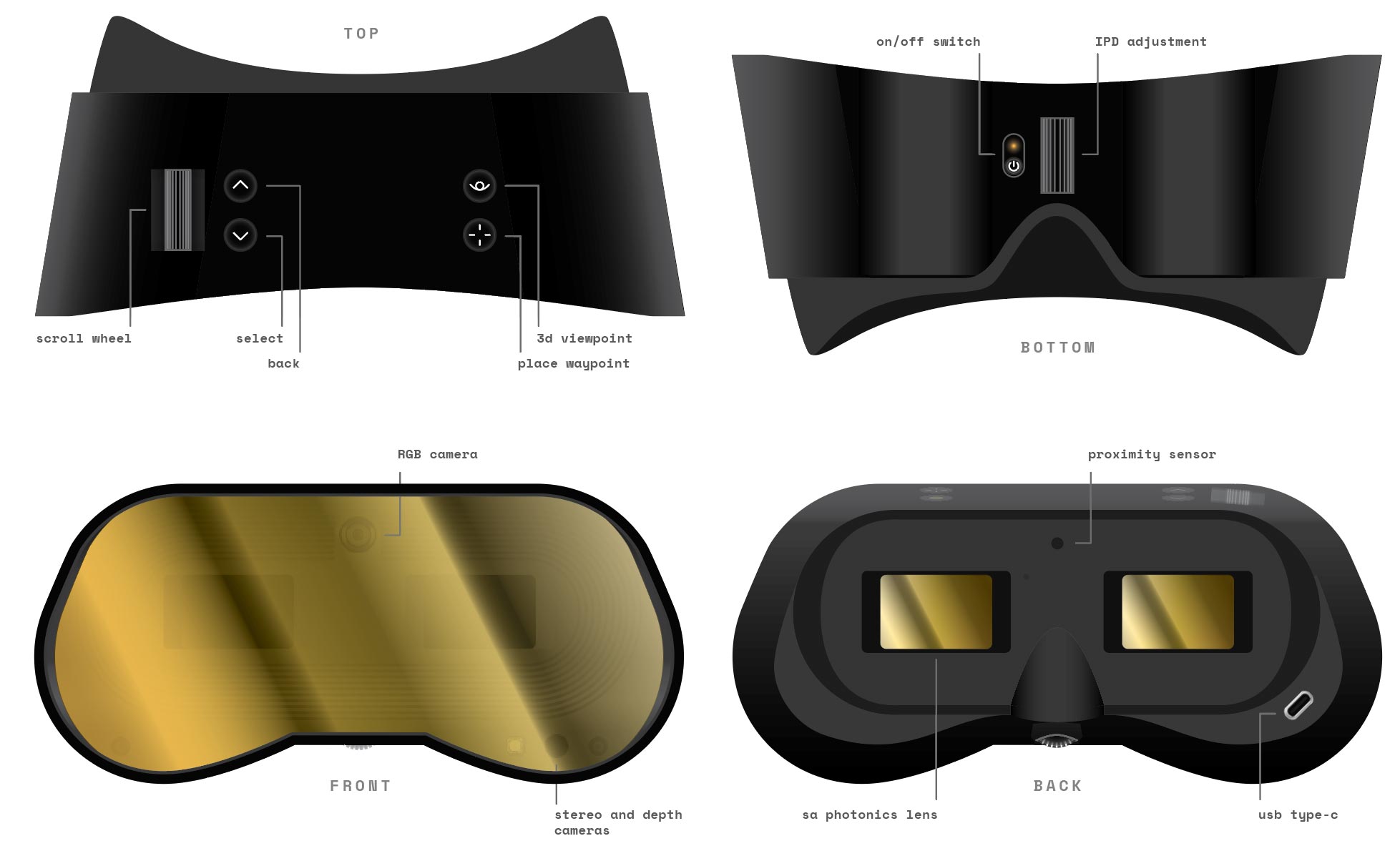

We started exploring form factors that could carry the displays we had been prototyping with for our glasses. I mocked up rough prototypes in chipboard to test out a variety of ergonomic shapes. We then started exploring a look and layout for all the components and buttons.

The new narrowed focus made it much easier to settle on a small set of features for the MVP. We interviewed hikers and frequent travelers and started exploring ways to display information like hiking trails, elevation, weather, and attractions.

PHASE 3 LEARNINGS

While the use cases were compelling, we decided that the product was a bit too niche for the investment required, and that it might make more sense to wait until the technology, especially in displays, improved. That said, I think it clarified for me that the first successful AR glasses or headsets will probably be for a very specific use case. Once that helps bootstrap and lower the cost of the technology, we'll finally have a more obvious path to consumer AR glasses.

Selected Works

VR.DESIGN+MOBILE.DESIGN+DESIGN.MANAGEMENT
As a founding member of Facebook VR design I helped build a thriving VR ecosystem

INTERACTION.DESIGN+PROTOTYPING+INDUSTRIAL.DESIGN
Samsung Research invested in our small team to explore the potential use cases for AR glasses

INDUSTRIAL.DESIGN+METAL.WORKING+GAME.DESIGN
Through my time at RISD I worked on a variety of projects with NASA that eventually led me to VR

MOBILE.DESIGN+WEB.DESIGN+GRAPHIC.DESIGN
I love contributing my skills to charities

INDUSTRIAL.DESIGN+WOODWORKING
A way of keeping up my craft

GAME.DESIGN+3D.MODELING+PROTOTYPING
Design should be fun

Wanna collab? Hit me up

©_©
